Overview

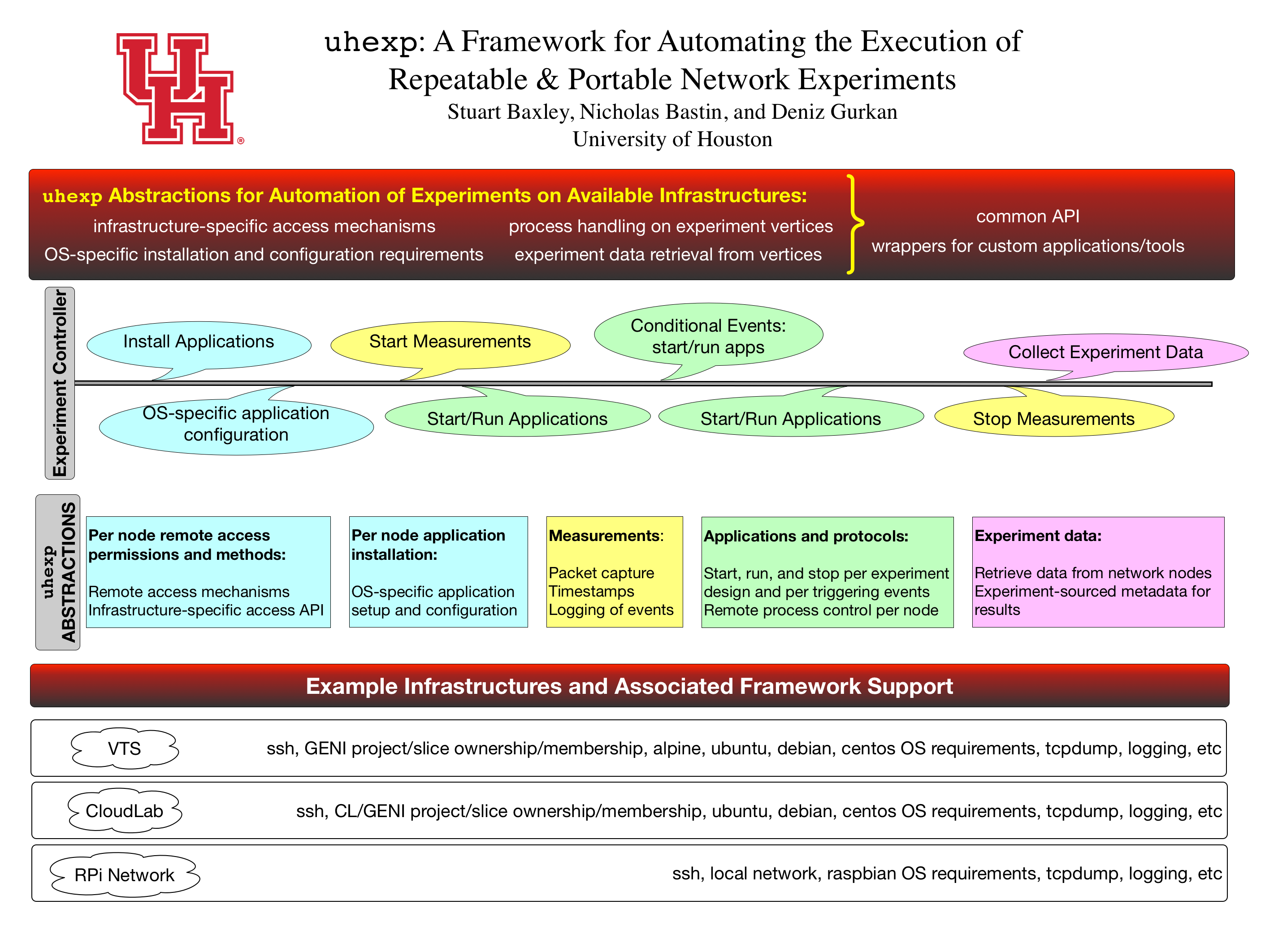

This project is a collection of reference experiments that serve as templates for various reservation and execution scenarios in networking research. These templates use uhexp, which automates the repeatable and portable execution of networking research experiments.

This repository contains a minimum viable experiment to be used as a reference: a client node and a server node connected through a layer 2 Ethernet bridge. The client runs curl to retrieve a large file from the Apache web server running on the server node, while tcpdump captures packets on both the client and the server. In addition, a monitoring node connected to the span port of the bridge captures the packets it receives from that span port.

This overview uses this reference experiment to describe the capabilities of uhexp and the reference experiments.
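As a rough illustration of what the reference experiment executes on each node, the sketch below builds the command lines as Python argument lists. It assumes the host aliases client, server, and monitor, a capture interface named eth1 on every node, and a hypothetical file path /largefile on the server; none of these values are taken from the repository, and the function is not part of uhexp.

```python
# Hypothetical sketch: per-node commands for the reference experiment.
# Host aliases, the interface name, and the URL path are assumptions.


def reference_commands(server_host: str = "server",
                       iface: str = "eth1",
                       url_path: str = "/largefile") -> dict[str, list[list[str]]]:
    """Return, for each node, the argv lists an experiment run might execute."""

    def capture(node: str) -> list[str]:
        # tcpdump -i <iface> -w <file> writes the raw packets to a pcap file
        return ["sudo", "tcpdump", "-i", iface, "-w", f"/tmp/{node}.pcap"]

    return {
        # Client: capture packets, then fetch a large file over HTTP.
        "client": [
            capture("client"),
            ["curl", "-o", "/dev/null", f"http://{server_host}{url_path}"],
        ],
        # Server: Apache is assumed to be serving the file already;
        # tcpdump captures the server-side packets.
        "server": [capture("server")],
        # Monitor: captures the frames it receives from the bridge span port.
        "monitor": [capture("monitor")],
    }


if __name__ == "__main__":
    for node, commands in reference_commands().items():
        for argv in commands:
            print(f"{node}: {' '.join(argv)}")
```

Returning argument lists rather than shell strings keeps each command ready for `subprocess.run` or for wrapping in an ssh call.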

Experiment Overview

There are three phases of an experiment:

1. Build the topology (most examples here use a reservation) on an infrastructure where the observations for the experiment will take place.
2. Run the experiment according to the scenarios the experimenter derives to test a given research hypothesis.
3. Analyze all observations to verify the particular test scenario within the hypothesis and to validate the methods applied in that scenario [JAIN1990], [WHITE2002].

This documentation focuses on the first two phases, since the reference experiments and uhexp provide the abstractions for orchestrating an experiment run.

More information on how to design networking research experiments is available in publications such as [EDWARDS2015].

Topology Building Phase

The experiments start with a topology set up on an infrastructure of the experimenter's choice. Example infrastructures are:

- a local network of IoT nodes,
- Raspberry Pi (RPi) devices, or
- other infrastructure where compute and networking resources may be available.

uhexp assumes that building and setting up the topology produces an ssh config file that is available to the run phase of the experiment.
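To make this hand-off concrete, the sketch below shows a small ssh config file of the kind the topology building phase might produce, together with a minimal parser that maps each Host alias to its options. The aliases and addresses (from the 192.0.2.0/24 documentation range) are illustrative, and the parser is a simplification written for this example, not part of uhexp: it ignores Match blocks, Include directives, and options that appear before the first Host line.

```python
# Minimal sketch of an ssh config parser. It assumes simple "Keyword value"
# lines grouped under "Host" lines; Match blocks, Include directives, and
# options before the first Host line are ignored. Hosts and addresses are
# illustrative (192.0.2.0/24 is a documentation range).

SAMPLE_SSH_CONFIG = """\
Host client
    HostName 192.0.2.10
    User experimenter
    IdentityFile ~/.ssh/id_ed25519

Host server
    HostName 192.0.2.11
    User experimenter
    Port 22
"""


def parse_ssh_config(text: str) -> dict[str, dict[str, str]]:
    """Map each Host alias to its options (keywords lower-cased)."""
    hosts: dict[str, dict[str, str]] = {}
    current: list[str] = []  # aliases of the Host block being read
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue  # keyword without a value: ignored in this sketch
        key, value = parts[0].lower(), parts[1].strip()
        if key == "host":
            current = value.split()
            for alias in current:
                hosts.setdefault(alias, {})
        elif key == "match":
            current = []  # Match blocks are not supported: skip their options
        elif key == "include":
            continue  # Include directives are not followed in this sketch
        else:
            for alias in current:
                # like ssh, keep the first value seen for each keyword
                hosts[alias].setdefault(key, value)
    return hosts


if __name__ == "__main__":
    for alias, options in parse_ssh_config(SAMPLE_SSH_CONFIG).items():
        print(alias, options.get("hostname"), options.get("user"))
```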

The compute resources in each infrastructure may have different access requirements, each with its associated API. Basic access through an ssh config file only requires that the user can set up key-based ssh access to these resources, which most infrastructures support.
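One way to confirm key-based access before the run phase is to call the OpenSSH client with the experiment's config file and `BatchMode=yes`, which makes ssh fail instead of prompting for a password. The helper names below are hypothetical; only the ssh options `-F`, `-o BatchMode=yes`, and `-o ConnectTimeout` are standard OpenSSH features.

```python
import subprocess


def ssh_argv(config: str, host: str, command: list[str]) -> list[str]:
    """Build an ssh command line that reads the experiment's ssh config file."""
    return [
        "ssh", "-F", config,
        "-o", "BatchMode=yes",      # never prompt; fail if keys are not set up
        "-o", "ConnectTimeout=10",  # give up on unreachable nodes
        host, *command,
    ]


def has_key_access(config: str, host: str, timeout_s: float = 30.0) -> bool:
    """Return True if `ssh <host> true` succeeds without interactive auth."""
    try:
        result = subprocess.run(ssh_argv(config, host, ["true"]),
                                capture_output=True, timeout=timeout_s)
    except (subprocess.TimeoutExpired, FileNotFoundError):
        # FileNotFoundError: no ssh client is installed on this machine
        return False
    return result.returncode == 0
```

For example, `has_key_access("experiment.ssh_config", "client")` could be called for every host alias before starting a run, with the config file name being whatever the topology building phase produced.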

The reserve.py Python programs use an infrastructure-specific API wherever an orchestration library is available for the given infrastructure.

For example, the reference experiments in this repository use the geni-lib Python library to interact with the NSF GENI Federation for reservations on the NSF GENI Federated Testbed and NSF CloudLab. Building topologies from compute and networking resources on other infrastructures may involve manual wiring and the configuration of a custom control network for the experiment.

Attention

The reservation should follow from the experiment design. For example, if a span port must be enabled on a particular bridge in the experiment topology, it may not be possible to enable it during the run phase, and consequently the experiment cannot be executed with the desired observations. The experimenter completes the experiment design phase before starting any experiment [EDWARDS2015]; this phase is outside the scope of this documentation.

Reference experiments in this repository include a reserve.py Python program whenever the infrastructure allows the topology build to be orchestrated through an API. For resources such as a set of IoT devices, RPi nodes, or other local devices, the topology is built by manually configuring the compute and networking resources according to the custom experiment requirements.
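For a manually built topology, the layer 2 bridge and span port of the reference experiment might be configured on a Linux host roughly as follows. This is a sketch under assumptions that do not come from the repository: the bridge is a Linux host managed with iproute2 and tc, the commands run as root, the interface names are placeholders, and tc's `mirred` action stands in for a switch span port.

```python
# Hypothetical sketch: shell commands that turn a Linux host into the layer 2
# bridge of the reference topology, with a software "span port".
# Assumptions: iproute2 and tc are installed, the commands run as root, and
# the interface names passed in exist on the bridge host.


def bridge_commands(bridge: str, members: list[str], span_port: str) -> list[str]:
    """Return commands that bridge `members` and mirror their traffic to `span_port`."""
    cmds = [
        f"ip link add name {bridge} type bridge",
        f"ip link set {bridge} up",
        f"ip link set {span_port} up",  # the span port stays outside the bridge
    ]
    for ifname in members:
        cmds.append(f"ip link set {ifname} master {bridge}")
        cmds.append(f"ip link set {ifname} up")
    for ifname in members:
        # Traffic between member ports enters the bridge on one of them, so
        # mirroring every member's ingress copies both directions of it.
        cmds.append(f"tc qdisc add dev {ifname} handle ffff: ingress")
        cmds.append(
            f"tc filter add dev {ifname} parent ffff: matchall "
            f"action mirred egress mirror dev {span_port}"
        )
    return cmds


if __name__ == "__main__":
    # e.g. client on eth1, server on eth2, monitoring node on eth3
    print("\n".join(bridge_commands("br0", ["eth1", "eth2"], "eth3")))
```

Generating the commands as text lets the experimenter review them, or save them as a setup script for the bridge node, before applying them.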

Experiment Run Phase

Given the ssh config file for a topology built on an infrastructure with compute and networking resources, the experimenter's design of the hypothesis test scenario dictates the experiment run workflow.

A networking research workflow may be composed of the following steps:

1. Gather information about the operating systems (OS).
2. Install OS-specific versions of applications.
3. Configure the applications for each OS.
4. Gather information about the system configuration before the experiment run.
5. Start measurements at the observation points chosen for the experiment scenario.
6. Start or run applications.

   Note

   A tcpdump process is explicitly started and stopped, whereas an ftp application may run for a predetermined or limited time and stop by itself.

7. Act on any conditional start, stop, or run actions triggered by events during the experiment.
8. Start or run other applications where applicable.
9. Stop measurements at the observation points and save the data.
10. Gather information about the system configuration after the experiment run.
11. Collect observation and experimental state data.
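The steps above can be sketched in Python as an ordered list of remote commands. This is a hypothetical illustration, not the uhexp API: it assumes Debian-, Ubuntu-, or Fedora-based nodes, passwordless sudo, host aliases defined in the ssh config file, and a capture interface named eth1. It contrasts a measurement that must be explicitly started and stopped (tcpdump, stopped with SIGINT so it flushes its capture file) with an application that stops by itself (curl bounded by `--max-time`); application configuration and event-driven actions are omitted.

```python
import shlex
import subprocess

# Hypothetical sketch of the run-phase steps as (host, shell command) pairs.
# Host aliases, the interface name, file names, the package lists, and
# passwordless sudo on the nodes are assumptions; this is not the uhexp API.

INSTALL = {  # OS-specific installation, keyed by the ID field of /etc/os-release
    "ubuntu": "sudo apt-get install -y tcpdump curl",
    "debian": "sudo apt-get install -y tcpdump curl",
    "fedora": "sudo dnf install -y tcpdump curl",
}


def parse_os_id(os_release: str) -> str:
    """Extract the ID field from the contents of /etc/os-release."""
    for line in os_release.splitlines():
        if line.startswith("ID="):
            return line.split("=", 1)[1].strip().strip('"')
    raise ValueError("no ID= line in os-release text")


def gather_os_ids(ssh_config: str, hosts: list[str]) -> dict[str, str]:
    """Gather OS information from every node over ssh."""
    ids = {}
    for host in hosts:
        out = subprocess.run(["ssh", "-F", ssh_config, host, "cat /etc/os-release"],
                             capture_output=True, text=True, check=True).stdout
        ids[host] = parse_os_id(out)
    return ids


def workflow_steps(os_ids: dict[str, str], url: str, client: str = "client",
                   iface: str = "eth1", max_time_s: int = 60) -> list[tuple[str, str]]:
    """Install, state capture, measurement, and application steps, in order."""
    hosts = list(os_ids)
    steps = [(h, INSTALL[os_ids[h]]) for h in hosts]               # install
    steps += [(h, "ip addr > config-before.txt") for h in hosts]  # state before
    # Measurements run in the background until they are explicitly stopped.
    steps += [(h, f"sudo nohup tcpdump -i {iface} -w /tmp/{h}.pcap "
                  "> /dev/null 2>&1 < /dev/null &") for h in hosts]
    # The application stops by itself: curl gives up after max_time_s seconds.
    steps.append((client, f"curl --max-time {max_time_s} -o /dev/null {shlex.quote(url)}"))
    steps += [(h, "sudo pkill -INT tcpdump") for h in hosts]      # stop, flush pcap
    steps += [(h, "ip addr > config-after.txt") for h in hosts]   # state after
    return steps


def execute(ssh_config: str, steps: list[tuple[str, str]]) -> None:
    """Run each step over ssh; check=True aborts at the first failing step."""
    for host, cmd in steps:
        subprocess.run(["ssh", "-F", ssh_config, host, cmd], check=True)


def collect(ssh_config: str, hosts: list[str]) -> None:
    """Copy observations and system state files back with scp."""
    for h in hosts:
        for remote, local in ((f"/tmp/{h}.pcap", f"{h}.pcap"),
                              ("config-before.txt", f"{h}-config-before.txt"),
                              ("config-after.txt", f"{h}-config-after.txt")):
            subprocess.run(["scp", "-F", ssh_config, f"{h}:{remote}", local],
                           check=True)
```

Keeping the steps as plain data makes it easy to print them for a dry run before `execute` sends them to the nodes.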

These steps are a general guideline for composing the experimental test scenario and may vary depending on the experimental design. Every reference experiment template has a run.py Python program that uses uhexp.

Portability

The portability of uhexp is demonstrated by the reference experiment with a 4-node topology: three clients and one web server connected by a bridge. This reference experiment is run over three different infrastructures, including:

- Virtual Topology Services (VTS)
- RPi devices in an Ethernet network

Each topology has an ssh config file for its end nodes, and the same template Python program, runexp.py, runs the same experiment on all three infrastructures. For a different infrastructure, uhexp provides the abstraction methods required to add application and measurement handling, along with the experiment controller APIs.
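If the run program refers to nodes only through ssh host aliases, porting an experiment amounts to supplying an ssh config file that defines the same aliases with infrastructure-specific addresses. A minimal pre-flight check, assuming hypothetical aliases client1 to client3 and server for the 4-node topology, could compare the aliases each infrastructure's config defines against those the experiment needs.

```python
# Hypothetical pre-flight check: does an infrastructure's ssh config define
# every host alias the experiment refers to? Alias names are assumptions.

REQUIRED_HOSTS = frozenset({"client1", "client2", "client3", "server"})


def host_aliases(ssh_config_text: str) -> set[str]:
    """Collect literal Host aliases, skipping wildcard and negated patterns."""
    aliases: set[str] = set()
    for line in ssh_config_text.splitlines():
        parts = line.strip().split()
        if len(parts) >= 2 and parts[0].lower() == "host":
            aliases.update(p for p in parts[1:] if not any(c in p for c in "*?!"))
    return aliases


def missing_hosts(ssh_config_text: str,
                  required: frozenset[str] = REQUIRED_HOSTS) -> set[str]:
    """Aliases the experiment needs that this ssh config does not define."""
    return set(required) - host_aliases(ssh_config_text)


if __name__ == "__main__":
    vts = "Host client1 client2 client3\n    User experimenter\nHost server\n"
    rpi = "Host client1\nHost client2\nHost server\n"
    for name, text in (("VTS", vts), ("RPi", rpi)):
        print(name, sorted(missing_hosts(text)) or "ok")
```

The addresses, users, and keys behind each alias can differ freely between infrastructures; only the alias names have to match what the run program expects.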

Repeatability

Reference experiments can be conducted at any future time, under any set of network impairments, and on any of the supported operating systems running on the end nodes. Repeated runs can improve the fidelity of the collected data and support new investigations from different perspectives. Each experiment run can also be configured with a different run duration and with different triggers for conditional actions on prescribed events.
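Capturing a run's parameters as data makes it straightforward to repeat the same experiment under different conditions. The sketch below is a hypothetical example, not uhexp's configuration format: it records a duration and impairments per run, renders the impairments as a Linux `tc netem` command (an assumed choice of impairment tool), sweeps a small grid of settings, and provides a polling helper of the kind a trigger for a conditional action might use.

```python
import itertools
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical per-run parameters; field names, the interface, and the use
# of tc netem for impairments are assumptions, not uhexp settings.


@dataclass(frozen=True)
class RunConfig:
    duration_s: int = 60
    delay_ms: int = 0
    loss_pct: float = 0.0
    iface: str = "eth1"

    def netem_command(self) -> Optional[str]:
        """tc netem command applying this run's impairments, or None if none."""
        opts = []
        if self.delay_ms:
            opts.append(f"delay {self.delay_ms}ms")
        if self.loss_pct:
            opts.append(f"loss {self.loss_pct}%")
        if not opts:
            return None
        return f"sudo tc qdisc replace dev {self.iface} root netem " + " ".join(opts)


def sweep(delays_ms=(0, 50), losses_pct=(0.0, 1.0), duration_s=60) -> list[RunConfig]:
    """One RunConfig per combination of delay and loss."""
    return [RunConfig(duration_s=duration_s, delay_ms=d, loss_pct=loss)
            for d, loss in itertools.product(delays_ms, losses_pct)]


def wait_for(predicate: Callable[[], bool], timeout_s: float,
             interval_s: float = 1.0) -> bool:
    """Trigger helper: poll predicate until it is true or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Because `RunConfig` is frozen and comparable, each run's settings can be logged next to its data and later reused to reproduce exactly the same conditions.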

- JAIN1990

Jain, Raj. The art of computer systems performance analysis: techniques for experimental design, measurement, simulation, and modeling. John Wiley & Sons, 1990.

- WHITE2002

White, Brian, Jay Lepreau, Leigh Stoller, Robert Ricci, Shashi Guruprasad, Mac Newbold, Mike Hibler, Chad Barb, and Abhijeet Joglekar. “An integrated experimental environment for distributed systems and networks.” ACM SIGOPS Operating Systems Review 36, no. SI (2002): 255-270.

- EDWARDS2015

Edwards, Sarah, Xuan Liu, and Niky Riga. “Creating repeatable computer science and networking experiments on shared, public testbeds.” ACM SIGOPS Operating Systems Review 49, no. 1 (2015): 90-99.